As Quality Assurance Engineers at zen8labs, a global technology consultancy, we face persistent challenges inherent in software testing. From limited testing time caused by development delays to issues that only surface under real user conditions, our experiences highlight the dynamic nature of quality assurance. Code refactoring with an unclear impact and problems that are hard to predict in live user environments demand constant adaptability. Each challenge prompts us to evolve our strategies for efficient testing.

Last-minute changes in requirements

One of the big challenges we often face in quality assurance at zen8labs is dealing with sudden changes in requirements. These changes can often mess up the whole software development and testing process, creating uncertainties that might affect the overall product quality. When requirements get changed late in the development process, it can set off a chain reaction, making us rethink our test plans and cases, sometimes even forcing us to reevaluate our whole testing strategy.

This situation can seriously affect project timelines and budgets: we may have to stretch our testing effort to absorb the changes, which risks leaving areas untested or letting bugs slip through. To handle last-minute requirement changes, everyone involved needs to communicate and work together. A solid change-management process, regular reviews, and a flexible approach help us adapt to the project’s twists and turns. Proper documentation and regular checks for potential issues also give us early warning of problems, so we can act before they turn into chaos and help zen8labs deliver a top-notch product.

Insufficient test data

Our QA team at zen8labs has been tackling a recurring challenge: not having enough test data. The problem appears when the datasets available for testing fail to represent the different scenarios and conditions the application might encounter in real-world use. A lack of variety and volume in test data limits our ability to verify the robustness and reliability of the software across a wide range of situations.

As a result, we often find ourselves working with incomplete test data coverage while striving to make sure our testing captures the complexity and variability of real user interactions with the application. Overcoming this challenge has been crucial to making our testing processes at zen8labs more effective and to catching potential issues before the software is released.

To address this challenge, zen8labs’s QA engineers can turn to a practical solution: simulated (mock) data. Simulated data mimics the characteristics and patterns of real-world data without including sensitive or confidential information. It offers several benefits when test data is insufficient or inaccurate:

- Data generation: Easily generated mock data can simulate various scenarios and conditions, enabling QA Engineers to craft a diverse set of test cases covering a wide range of potential inputs and use cases.

- Data variability: With mock data, QA teams can introduce variability to imitate different user profiles, behaviors, and environmental conditions. This variability aids in testing the application’s resilience and adaptability to diverse data inputs.

- Data privacy and security: Mock data avoids the use of real user information, which greatly reduces privacy and security concerns. This is especially important when testing applications that handle sensitive data.

- Reproducibility: Mock data built from fixed definitions (or generated with a fixed random seed) makes test scenarios reproducible, allowing consistent testing across different environments and iterations. This reproducibility is essential for reliably identifying and fixing issues.

- Testing edge cases: By tailoring mock data to represent extreme or edge cases, QA Engineers can evaluate how well the application handles less common but critical scenarios, ensuring robustness and reliability.

- Automation support: Mock data is well-suited for automated testing scenarios. Automation frameworks can easily integrate with mock data generation tools, facilitating efficient and scalable testing processes.
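
To make the generation, variability, reproducibility, and edge-case points above concrete, here is a minimal Python sketch of a seeded mock-data generator. The `MockUser` fields, the edge-case values, and the default 20% edge-case ratio are illustrative assumptions, not zen8labs’ actual data model; a real project might instead use a dedicated data-generation library or its own fixtures.

```python
import random
from dataclasses import dataclass

# Illustrative edge-case values (assumptions): empty, minimal, non-ASCII, and very long names,
# plus ages sitting on typical validation boundaries.
EDGE_CASE_NAMES = ["", "A", "Ñandú O'Brien", "x" * 255]
EDGE_CASE_AGES = [0, 17, 18, 120]
COUNTRIES = ["VN", "JP", "US", "DE", "BR"]


@dataclass
class MockUser:
    user_id: int
    name: str
    age: int
    country: str


def generate_users(count: int, seed: int = 42, edge_case_ratio: float = 0.2) -> list[MockUser]:
    """Generate synthetic users; the same seed always produces the same list."""
    rng = random.Random(seed)  # private RNG, so results do not depend on global random state
    users = []
    for i in range(count):
        if rng.random() < edge_case_ratio:
            # Deliberately inject an unusual record.
            name = rng.choice(EDGE_CASE_NAMES)
            age = rng.choice(EDGE_CASE_AGES)
        else:
            # "Typical" record with randomized but plausible values.
            name = f"user_{i}"
            age = rng.randint(18, 80)
        users.append(MockUser(user_id=i, name=name, age=age, country=rng.choice(COUNTRIES)))
    return users


if __name__ == "__main__":
    for user in generate_users(5):
        print(user)
```

Because the generator uses its own `random.Random(seed)`, a failing case can be reproduced by re-running with the same seed, while changing the seed or `edge_case_ratio` produces a different mix of profiles.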

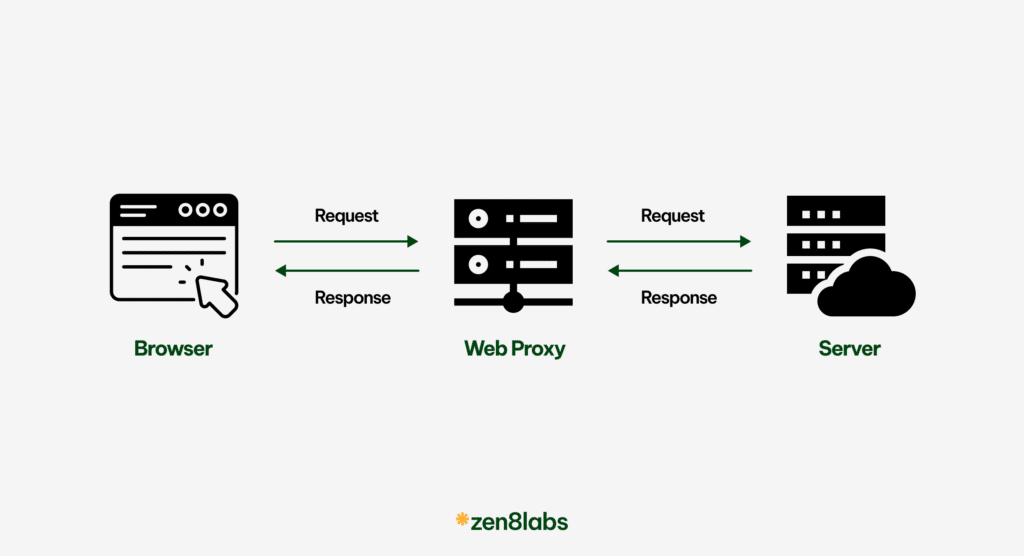

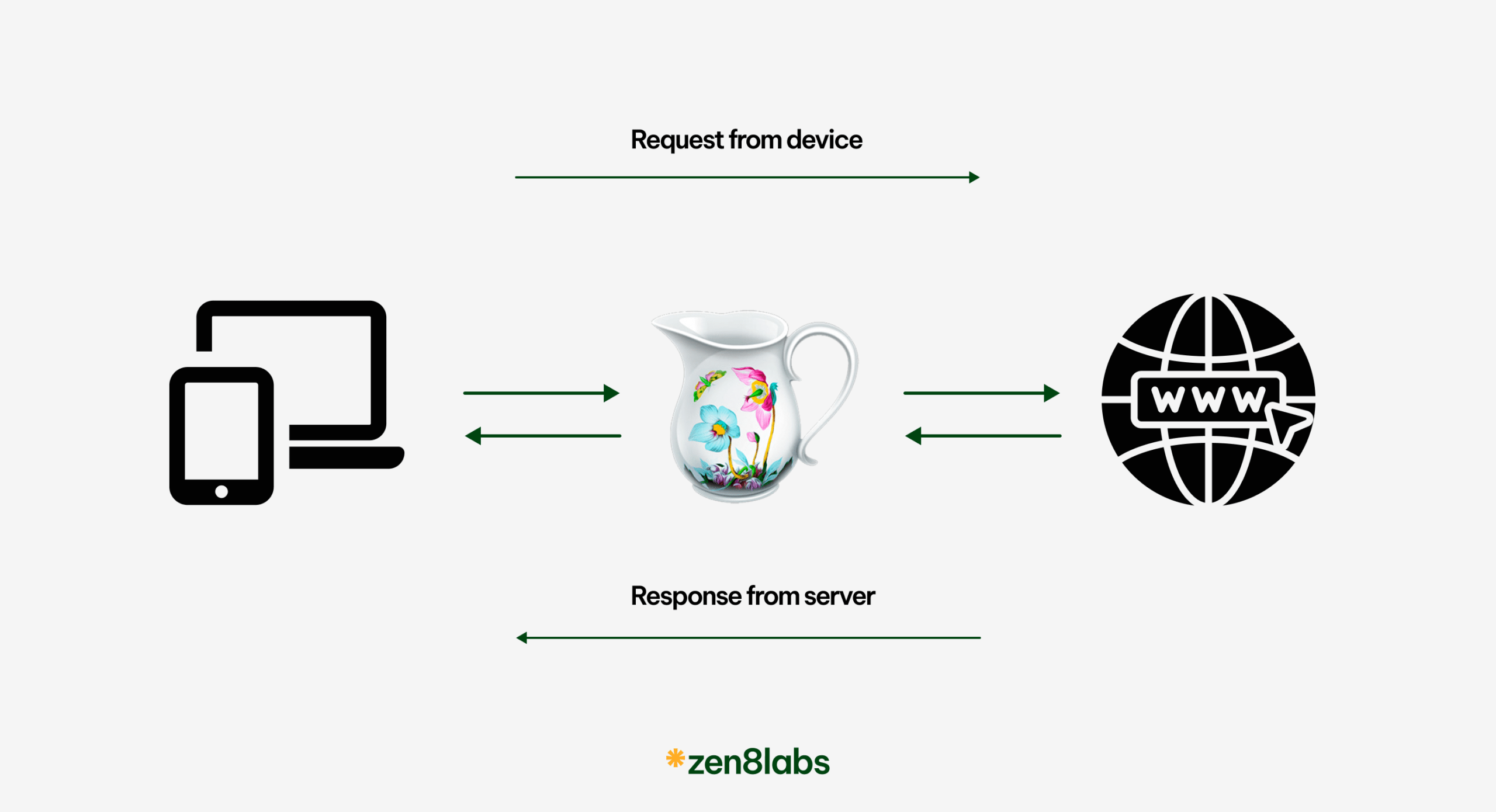

QA engineers and developers at zen8labs extend their testing capabilities with specialized tools such as Postman’s mock server, Charles Proxy, and Proxyman. Postman’s mock server simulates API endpoints, while Charles Proxy and Proxyman intercept network traffic so that we can inspect and modify requests or replace real responses with mock data. With these tools, our team can simulate diverse scenarios, test edge cases, and make sure applications handle different data types gracefully.
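
Postman’s mock server, Charles Proxy, and Proxyman work at the network level. At the unit-test level, the same idea (swap the real API response for canned data, including awkward edge cases) can be sketched with Python’s standard `unittest.mock`. The endpoint URL, `fetch_profile`, and `display_name` below are hypothetical; the fetch function is injected as a parameter so the sketch never touches the network.

```python
import json
import urllib.request
from unittest import mock

# Hypothetical endpoint, used only for illustration; it is never called in the checks below.
PROFILE_URL = "https://api.example.com/profile/{user_id}"


def fetch_profile(user_id: int) -> dict:
    """Real network call that a mock replaces during testing."""
    with urllib.request.urlopen(PROFILE_URL.format(user_id=user_id), timeout=5) as resp:
        return json.load(resp)


def display_name(user_id: int, fetch=fetch_profile) -> str:
    """Code under test: must cope with failures and missing, null, or blank fields."""
    try:
        profile = fetch(user_id)
    except (OSError, ValueError):  # URLError and timeouts are OSErrors; bad JSON is a ValueError
        return "Guest"
    name = (profile.get("name") or "").strip()
    return name or "Guest"


# Canned responses, similar in spirit to examples on a mock server or a proxy's local mapping.
MOCK_RESPONSES = {
    1: {"name": "Linh Nguyen"},  # happy path
    2: {"name": "   "},          # whitespace-only value
    3: {},                       # field missing entirely
    4: {"name": None},           # explicit null
}


def run_checks() -> dict:
    fake_fetch = mock.Mock(side_effect=lambda uid: MOCK_RESPONSES[uid])
    results = {uid: display_name(uid, fetch=fake_fetch) for uid in MOCK_RESPONSES}
    # A simulated network failure: the mock raises instead of returning data.
    results["timeout"] = display_name(99, fetch=mock.Mock(side_effect=TimeoutError))
    return results


if __name__ == "__main__":
    print(run_checks())
```

The network-level tools remain the better fit for exercising a full app build; a sketch like this complements them by keeping edge-case checks fast and repeatable in automated runs.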

Issues that only happen under real user conditions

Issues that surface only in live user environments are hard to deal with because they are unpredictable. Unlike controlled testing, real user conditions introduce diverse variables, such as devices, network speeds, and user behaviors, that are difficult to replicate accurately before release. These issues can hurt user satisfaction and the application’s reputation. To address this challenge, we take a multifaceted approach: we incorporate real-world scenarios into our test cases and apply exploratory testing. These strategies strengthen our ability to uncover and address issues that emerge only under the dynamic conditions of real-world usage. To complement them, we rely on a toolkit that combines logging and analytics systems such as Firebase, Mixpanel, and New Relic with real device cloud services and simulators that mimic diverse device behaviors.

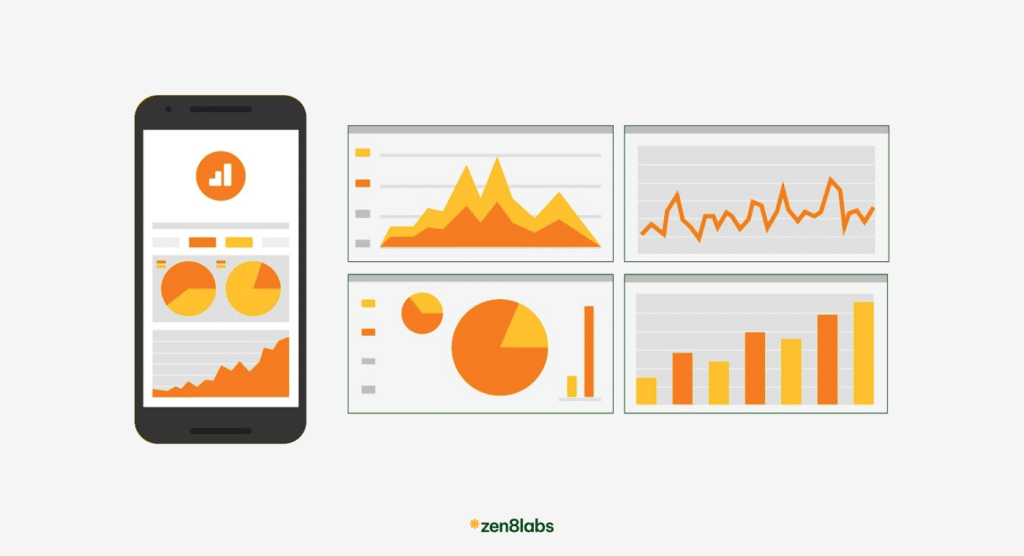

Logging systems

- Firebase: Provides real-time analytics, enabling QA teams to monitor user interactions and track application performance. Detailed event logging helps identify patterns and potential issues under diverse user conditions.

- Mixpanel: Specializes in tracking user behavior and interactions within an application. By analyzing user journeys and feature usage, QA Engineers gain insights into potential issues tied to specific user scenarios.

- New Relic: Excels at application performance monitoring, offering visibility into backend performance. It helps identify bottlenecks and issues that might arise under real user conditions, allowing for targeted triage.

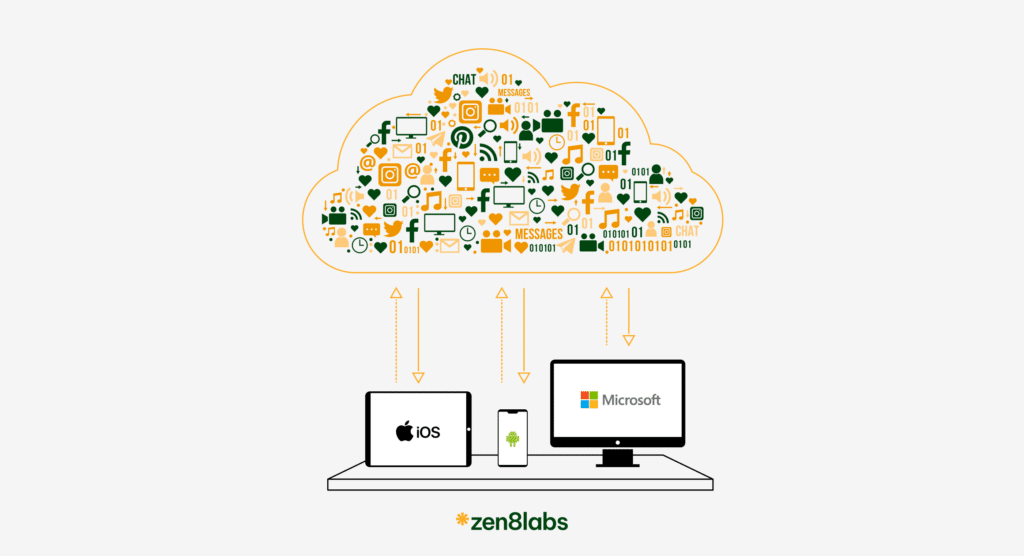

Real device cloud or simulator

- Real device cloud service: These services provide a wide range of real devices covering various operating systems and device models. QA Engineers execute test scenarios on real devices to closely mimic user conditions and identify device-specific issues.

- Simulator: Simulators and emulators allow QA teams to mimic the behavior of different devices and models in controlled environments. They enable testing applications under various conditions, helping identify issues that might arise on specific devices.

By incorporating these tools into our testing strategy, we have established a comprehensive approach to issues that arise only under real user conditions. Combining logging systems for detailed analytics with real device testing on cloud services and simulators lets us vet our software thoroughly across diverse scenarios, resulting in a more resilient and user-friendly product.

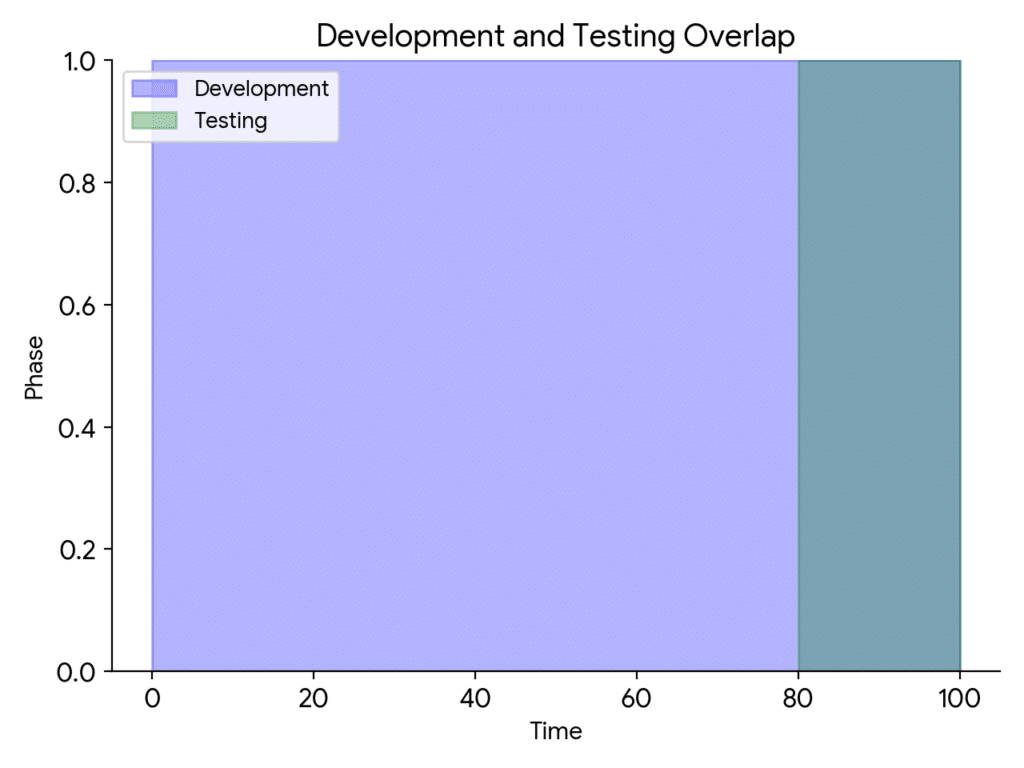

Time constraints arising from development delays

For QA Engineers, this is an ongoing challenge that significantly affects the testing process. When development is delayed, the window for comprehensive testing narrows, potentially jeopardizing the quality of the release. The impact extends beyond the immediate testing phase: it increases the risk of overlooking critical functionality and of finding defects late in the development cycle. Overcoming this challenge requires efficient time management. Crucial strategies include prioritizing testing activities based on risk assessment, collaborating closely with the development team to streamline processes, and automating repetitive tests. By adopting a risk-based testing approach and fostering collaboration between teams, we can make sure critical functionality is thoroughly tested even within constrained timelines. This proactive stance mitigates the impact of limited testing time and contributes to a more robust and reliable release.
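
A common way to put risk-based prioritization into numbers is to score each area as likelihood × impact and spend the available testing time on the highest scores first. The sketch below assumes 1 to 5 scales, team-estimated effort in minutes, and a simple greedy selection; the `RiskArea` type, area names, and figures are illustrative only, not a description of zen8labs’ actual process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskArea:
    name: str
    likelihood: int  # 1 (unlikely to break) .. 5 (very likely), estimated by the team
    impact: int      # 1 (cosmetic) .. 5 (critical business flow)
    minutes: int     # estimated effort to test this area

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def plan_tests(areas: list[RiskArea], budget_minutes: int) -> tuple[list[RiskArea], list[RiskArea]]:
    """Greedy plan: take the highest-risk areas first until the time budget runs out."""
    # Highest risk first; among equal risks, cheaper areas first.
    ordered = sorted(areas, key=lambda a: (-a.risk, a.minutes))
    planned, deferred = [], []
    remaining = budget_minutes
    for area in ordered:
        if area.minutes <= remaining:
            planned.append(area)
            remaining -= area.minutes
        else:
            deferred.append(area)  # report these to stakeholders as untested risk
    return planned, deferred


if __name__ == "__main__":
    areas = [
        RiskArea("checkout", likelihood=4, impact=5, minutes=60),
        RiskArea("login", likelihood=3, impact=5, minutes=30),
        RiskArea("search", likelihood=3, impact=3, minutes=45),
        RiskArea("profile edit", likelihood=2, impact=2, minutes=20),
        RiskArea("dark mode", likelihood=2, impact=1, minutes=15),
    ]
    planned, deferred = plan_tests(areas, budget_minutes=120)
    print("test now:", [a.name for a in planned])
    print("deferred:", [a.name for a in deferred])
```

With a 120-minute budget this plans `checkout`, `login`, and `profile edit` and defers `search` and `dark mode`. A greedy pass can skip a higher-risk area that does not fit and fill the leftover time with a cheaper one, so the deferred list is worth communicating explicitly to stakeholders.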

To cope with limited testing time caused by implementation delays, our QA team has adopted several strategies:

- Risk-based testing: Our approach prioritizes testing activities based on potential impact, ensuring thorough testing of high-priority areas even within time constraints.

- Continuous monitoring and adjustment: We continuously monitor project timelines and adjust testing priorities as the project’s scope and schedule evolve.

- Parallel testing: To enhance test coverage and expedite defect identification, we execute tests simultaneously on different environments or devices, especially in time-sensitive scenarios.

- Regression test suites: Comprehensive regression test suites re-run previously tested functionality with each new implementation, so new features can be added without breaking existing behavior and regression issues are kept to a minimum.

- Clear communication: Prioritizing open and transparent communication with project stakeholders, we keep all relevant parties informed about testing progress, challenges, and potential delays to support effective decision-making amid testing time limitations.
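
The parallel-testing idea can be sketched with Python’s `concurrent.futures`: the same check is dispatched to several environments at once and the results are collected as each run finishes. The environment names and `run_smoke_test` are hypothetical placeholders; in practice each call would drive a real device-cloud session or test runner, which this sketch does not attempt to model.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# Hypothetical environment matrix; real projects would pull this from their device-cloud setup.
ENVIRONMENTS = ["android-14-pixel", "android-12-galaxy", "ios-17-iphone15", "ios-16-iphone12"]


def run_smoke_test(env: str) -> tuple[bool, str]:
    """Placeholder for running a suite against one environment; returns (passed, detail)."""
    time.sleep(0.1)  # stands in for real test duration
    return True, ""


def run_in_parallel(envs: list[str], test_fn=run_smoke_test, max_workers: int = 4) -> dict[str, tuple[bool, str]]:
    """Run test_fn on every environment concurrently and collect one result per environment."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        future_to_env = {pool.submit(test_fn, env): env for env in envs}
        for future in as_completed(future_to_env):
            env = future_to_env[future]
            try:
                passed, detail = future.result()
            except Exception as exc:  # a crashed run is recorded as a failure instead of aborting the batch
                passed, detail = False, f"error: {exc!r}"
            results[env] = (passed, detail)
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    for env, (passed, detail) in sorted(run_in_parallel(ENVIRONMENTS).items()):
        print(f"{env}: {'PASS' if passed else 'FAIL'} {detail}")
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

Threads are a reasonable choice here because each run mostly waits on a remote device or browser; CPU-heavy work would call for processes instead.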

Consistently implementing these strategies has allowed our QA team to navigate the challenge of limited testing time successfully. These approaches optimize testing processes and contribute to the efficiency and reliability of our software releases.

Ambiguous impact of code refactoring

When development teams refactor code, the lack of clarity about the potential impact on existing functionality complicates our testing. Understanding how far the changes might affect the overall system is often delicate, because the details of the refactoring may be poorly documented or not apparent. The challenge compounds when we try to identify potential regressions or unintended consequences. This ambiguity has a significant impact on our testing timelines and resources, since we must invest additional effort in thorough regression testing to ensure the refactoring does not introduce defects. Striking a balance between encouraging code improvements and minimizing the uncertainty that comes with refactoring is crucial to maintaining the integrity of our testing processes and delivering reliable releases.

In response to the ambiguous impact of code refactoring, our QA team has implemented a set of proactive strategies to strengthen our testing processes:

- Clear communication channels: Establishing transparent communication channels with the development team to gain insights into the intent and scope of code refactoring efforts, fostering a collaborative understanding.

- Thorough impact analysis: Incorporating comprehensive impact analysis into our testing methodology to identify the affected areas and focus regression testing on the most susceptible functionality.

- Automated testing for regression scenarios: Leveraging automated testing to efficiently validate key functionalities and conduct repetitive regression testing, ensuring a swift and consistent evaluation of the codebase post-refactoring.

- Documentation emphasis: Encouraging developers to provide clear documentation for refactoring activities, serving as a valuable reference for QA Engineers and enhancing transparency in understanding the changes made.
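
One way to support impact analysis after a refactor is to walk a module dependency graph backwards from the changed modules and select only the regression suites that exercise an affected module. The graph and suite mapping below are hypothetical; in a real codebase they would come from build tooling, import analysis, or coverage data.

```python
from collections import deque

# Hypothetical module dependency graph: module -> modules it depends on.
DEPENDS_ON = {
    "checkout": ["cart", "payment"],
    "cart": ["pricing"],
    "payment": ["http_client"],
    "profile": ["http_client"],
    "search": ["pricing"],
    "pricing": [],
    "http_client": [],
}

# Hypothetical mapping of regression suites to the module each one exercises.
SUITES = {
    "test_checkout_flow": "checkout",
    "test_cart": "cart",
    "test_profile": "profile",
    "test_search": "search",
    "test_pricing_rules": "pricing",
}


def impacted_modules(changed: set[str], depends_on: dict[str, list[str]]) -> set[str]:
    """Return the changed modules plus every module that depends on them, directly or transitively."""
    # Invert the graph: module -> modules that depend on it.
    dependents: dict[str, set[str]] = {m: set() for m in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)
    # Breadth-first walk from the changed modules through their dependents.
    seen = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def suites_to_run(changed: set[str]) -> list[str]:
    affected = impacted_modules(changed, DEPENDS_ON)
    return sorted(suite for suite, module in SUITES.items() if module in affected)


if __name__ == "__main__":
    print(suites_to_run({"pricing"}))
```

A refactor of `pricing` here selects the cart, checkout, pricing, and search suites but skips `test_profile`. This only narrows where to focus first; if the dependency graph is incomplete, the full regression suite is still the safety net.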

By adopting these strategies, our QA team effectively manages the uncertainties associated with code refactoring, contributing to the efficiency, stability, and reliability of our software releases.

Conclusion

As QA teams navigate the complexity of software testing, teams at other technology consultancies are likely to encounter challenges like those we face at zen8labs. The benefits of the proactive approaches and tools we adopted in response extend beyond our specific context. By sharing our experiences and successful strategies among our QA members and developers, we are well equipped to overcome these challenges and deliver reliable software releases.

Toan Tran, Quality Assurance Engineer
